This broadcast from tomorrow is about robots. Those damn things are near about everywhere. In fact, in the future, there may be a robot for virtually every physical job to be done. That’s a fairly large addressable market up for grabs.

In my AI and Deep Tech research, I have uncovered so many amazing companies, large and small, across a wide array of applications. But what I realized only after stepping back a bit was how widespread autonomous robotics was among them. Let me show you what I mean.

First, let’s start with some definitions:

“Robots are physical agents.” — Stuart Russell and Peter Norvig in their legendary textbook Artificial Intelligence: A Modern Approach.

Autonomous robots are different from, say, human-supervised or human-operated robots in that they perform their task(s) without any explicit human direction or oversight.

To unpack this category, let’s start with the obvious: Humanoids.

This includes players like Figure, Tesla and Agility.

Must watch: Figure’s latest demo

Speech-to-speech interface ← Talk and it talks back.

End-to-end neural net ← All its actions are inferred or generated, similar to how LLMs work. In other words, the movement is autonomous, not human-assisted.

1x speed ← meaning the robot’s reactions and movements in the video are in true real-time.

Sign of the times: Agility is best known as Amazon’s warehouse robotics partner and is now a general-purpose partner of NVIDIA. (The robot wars have started!) They just ran a crew of robots moving totes continuously for 3.5 days at the supply chain conference Modex 2024 in order to… I don’t know, prove a pretty clear point, I guess?

Honorable mentions: though not humanoid, I thought these were worth including for reference:

Bear Robotics, the restaurant robot

Simbe, the grocery clerk robot

Then there are folks saying, hey humanoids are one way to skin the cat, but many physical challenges are best solved or can only be solved through different design approaches. Here are some of those:

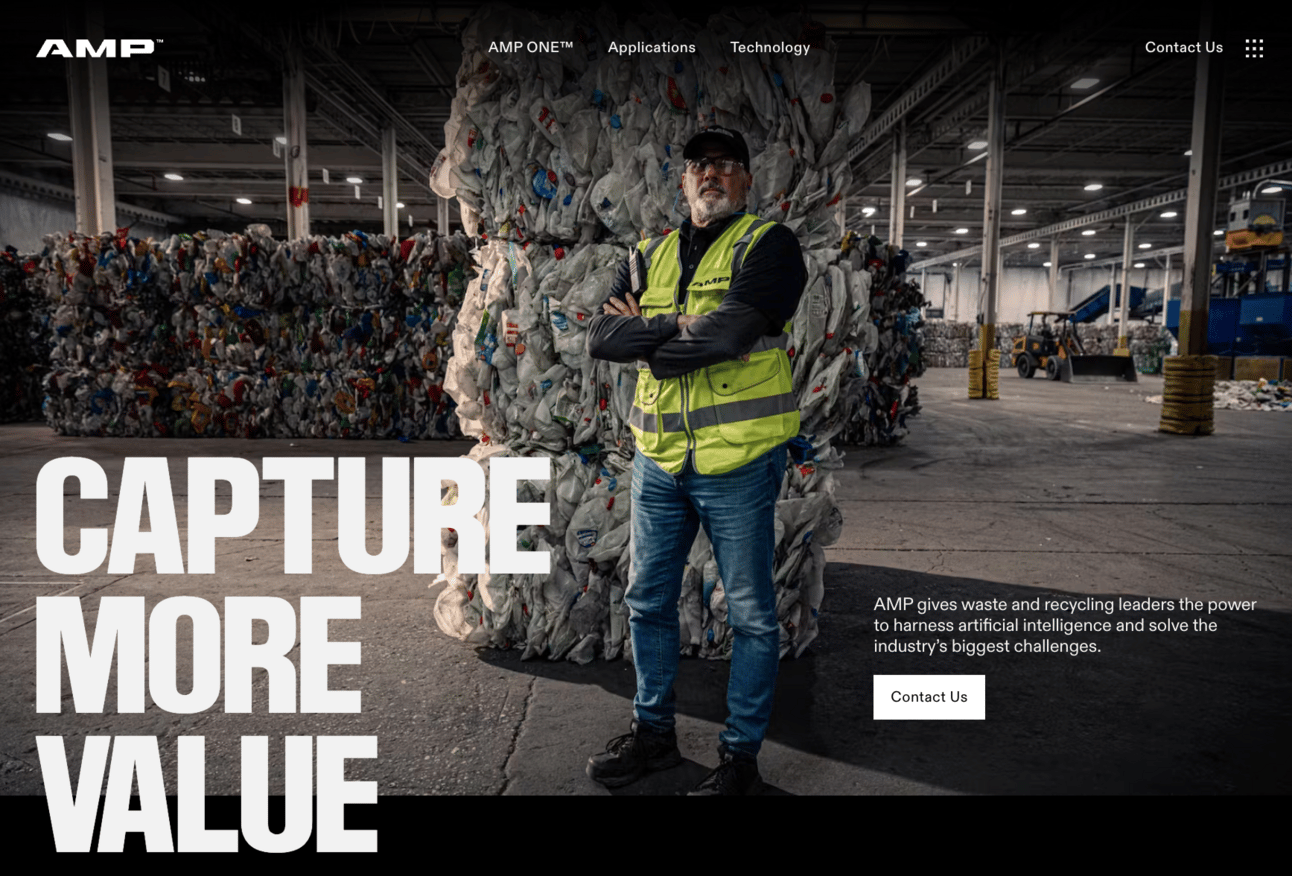

The Whole Room Approach: Take AMP Sortation. They recognized a serious problem with recycling. It turns out we’re all so bad at putting the correct things in the recycling bins that an extensive manual sortation process is needed before any recycling can even be done. Because of this difficulty, a lot of potentially recyclable mass is deemed irretrievable and ultimately tossed as garbage. With AMP, you instead toss all this mixed-up refuse on a conveyor belt wired up with computer vision that detects the different materials in the clump and an array of micro-air jets that gently nudge similar materials together for batch processing. That increases recycling yield while reducing time spent and, undoubtedly, some human drudgery as well, right? Hooray for the autonomous garbage-sorting robots, I say!

Watch video at nimbleone.io

The Fresh Approach: Check out Nimble One. They saw everyone go after Humanoids and said, what if we zag instead? And did they ever. Just look at this thing! It can maneuver in so many different ways, it’s remarkable. Like nothing you’ve ever seen before, right? We’ll undoubtedly see more unexpected robots like this in the future.

Autonomous lawn mower

The Vehicle Approach: Then sometimes we want our cars and trucks to be autonomous (aka full self-driving or FSD). Beyond that there are of course drones for both air and sea as well. There are also autonomous satellites. This category could include autonomous construction vehicles, lawn mowers, and vacuums.

The DIY Approach: I can’t describe this category any other way, and I do it with love and inspiration because to me it shows how garage-accessible modern robotics is for anyone willing to work hard enough to harness the available knowledge and relatively affordable technology. Amazing! Two of my favorites:

Reflect Orbital: Built a robot mirror capable of autonomously positioning and repositioning itself in real time as the sun, the Earth, and its hot air balloon ride all moved relative to each other, maintaining a consistent beam of reflected sunlight onto solar farms to increase energy yields. Video says it all.

Built Robotics: Converted an “off-the-shelf” excavator into an autonomous pile driver purpose-built for solar farm installations. Modded the vehicle by adding a large rear rack for carrying steel rods, and a computer vision-equipped, GPS-guided pile driver rig on the end of the excavator arm where the claw would typically be. With this setup, the team says one machine can install 300 steel rods in one day compared to 100 for a human crew. Let that soak in.

But! It’s worth noting that Built focused on the solar pile-driving problem only after pivoting away from the general problem of ‘autonomous construction vehicles’ writ large. This is a broader reminder that robotics is hard and people have been working on it for a long time.

Construction sites pose a difficult challenge for the developers of AI and robotics technology. Construction tasks often involve manipulating objects in 3D, and they take place on sites in a state of continuous change, while automation is most successful carrying out repetitive tasks with predictable outcomes. Making safe self-driving vehicles that travel on well-mapped public roads, which change more slowly, is in some ways easier.

Built was founded in 2017. It’s worth noting how far machine learning has come since then, and how that’s impacted both LLMs and robotics. Here is a noteworthy example from Russell and Norvig’s 2021 textbook (just 3 years old, but still pre-dating the recent surge in GenAI and GPU development):

Important aspects of robot state are measured by force sensors and torque sensors. These are indispensable when robots handle fragile objects or objects whose exact size and shape are unknown. Imagine a one-ton robotic manipulator screwing in a light bulb. It would be all too easy to apply too much force and break the bulb. Force sensors allow the robot to sense how hard it is gripping the bulb, and torque sensors allow it to sense how hard it is turning. High-quality sensors can measure forces in all three translational and three rotational directions. They do this at a frequency of several hundred times a second so that a robot can quickly detect unexpected forces and correct its actions before it breaks a light bulb. However, it can be a challenge to outfit a robot with high-end sensors and the computational power to monitor them. (emphasis mine)
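To make the light bulb example in that passage a bit more concrete, here is a minimal sketch of the kind of loop it describes: read the force sensor, compare against a target, adjust the grip, and let go if the reading is ever unexpectedly high. Everything here is my own illustration, not anything from the textbook or a real robot: the `GripController` class, the gain, the force values in newtons, and the toy `simulate` function (which pretends grip force is simply proportional to the actuator command) are all assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class GripController:
    """Hypothetical proportional grip controller (illustrative only)."""
    target_force_n: float  # desired grip force in newtons (assumed value)
    max_force_n: float     # safety limit; we assume the bulb breaks above this
    gain: float = 0.5      # proportional gain (assumed value)

    def step(self, measured_force_n: float, command: float) -> float:
        """Return the next actuator command from one force-sensor reading."""
        if measured_force_n >= self.max_force_n:
            return 0.0  # unexpected force detected: release immediately
        error = self.target_force_n - measured_force_n
        return max(0.0, command + self.gain * error)


def simulate(controller: GripController, steps: int = 500,
             stiffness: float = 1.0) -> List[float]:
    """Toy plant: measured force = stiffness * command (an assumption)."""
    command, force = 0.0, 0.0
    history = []
    for _ in range(steps):  # e.g. 500 steps would be one second at 500 Hz
        command = controller.step(force, command)
        force = stiffness * command
        history.append(force)
    return history


if __name__ == "__main__":
    forces = simulate(GripController(target_force_n=2.0, max_force_n=5.0))
    print(f"final grip force: {forces[-1]:.3f} N, peak: {max(forces):.3f} N")
```

The point of running the loop hundreds of times a second is that each correction is tiny, so the grip settles on the target without ever getting near the breaking force. A real controller would also have to deal with sensor noise, actuator delay, and torque, none of which this sketch attempts.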

Outfitting robots with high-end sensors and the computational power to monitor them is not a challenge anymore! And force and torque sensors aren’t the only things that benefit: practically everything about robotics improves when onboard computation improves. As Figure, Tesla, and even open-source academic projects have demonstrated, these problems are now well within our technical reach.

After all, Figure was only founded in 2022.

Then you consider NVIDIA’s latest chip announcement, which is rightly blowing people away, and you get reactions like this:

Next-order thoughts

Where does all this robotic autonomy get us anyway? Here are at least a few ideas in no particular order:

AI Swarms: Autonomous hordes, if you like. Imagine an entire road-building crew of robots working in tandem, or a city-building crew of robots working hard on Mars before we even get there, or a swarm of fighter jets carrying out a coordinated strike without any human pilots onboard.

That last one is real. See: Shield AI.

Agents + Robots: Russell and Norvig define robots as simply ‘physical agents,’ which makes sense. But given how software works, and how LLM-based agents are developing in front of our eyes today, I believe people will come to appreciate the difference between the Agent (Software) and the Robot (Hardware). Your robot may need a repair, but while it’s in the shop, they’ll be able to loan you a clone replacement: your Agent loaded into a new machine. When the repair is done, your Agent will be reloaded into the original machine and removed from the loaner.

There’s already a lot of spilled ink on personal AIs and we’re still early here, but interacting with a physical machine will increase the personalization opportunities and the feeling of a personalized AI. As the robotic hardware naturally upgrades every so many years, you’ll assume that the new one boots up with all the memory and ‘personality’ of the last one, and it will. After all, that’s how Jarvis works! Hmm… the thought of decommissioning one’s robot, which on our current trajectory is perhaps something we’ll all end up doing routinely in our lifetimes, is an unsettling idea.

I also think about this idea of talking to multiple machines but one agent, essentially merging this with the swarm idea above. For example, when doctors inevitably interact with autonomous medical robots, do you think they’ll address each device individually with a different name? Or will they simply give a command to one agent who then orchestrates the correct machines as necessary to execute the high-level task? It seems to me the latter. It’s interesting to think about how software and hardware will develop within the field of robotics.
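For the software-minded, the Agent/Robot split can be sketched in a few lines of Python. To be clear, this is a thought experiment in code, not how any real robot platform works: the `Agent` and `Robot` classes, the `dispatch` function, and the serial numbers and capability names are all hypothetical. The sketch shows the two ideas above: an agent whose memory survives being moved from one body to another, and a single agent routing the steps of a task to whichever machine fits.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Agent:
    """The software side: a name plus accumulated memory (hypothetical)."""
    name: str
    memory: List[str] = field(default_factory=list)

    def remember(self, fact: str) -> None:
        self.memory.append(fact)


@dataclass
class Robot:
    """The hardware side: a body that hosts at most one agent (hypothetical)."""
    serial: str
    capability: str
    agent: Optional[Agent] = None

    def load(self, agent: Agent) -> None:
        self.agent = agent

    def unload(self) -> Optional[Agent]:
        """Remove and return the hosted agent, leaving the body empty."""
        agent, self.agent = self.agent, None
        return agent

    def perform(self, task: str) -> str:
        if self.agent is None:
            raise RuntimeError(f"robot {self.serial} has no agent loaded")
        return f"{self.agent.name}@{self.serial}: {task}"


def dispatch(agent: Agent, fleet: List[Robot],
             plan: List[Tuple[str, str]]) -> List[str]:
    """One agent, many machines: send each (capability, task) step
    to the robot in the fleet that offers that capability."""
    by_capability = {robot.capability: robot for robot in fleet}
    results = []
    for capability, task in plan:
        robot = by_capability[capability]
        robot.load(agent)
        results.append(robot.perform(task))
    return results


if __name__ == "__main__":
    jarvis = Agent("Jarvis")
    original = Robot("unit-001", "household")
    loaner = Robot("unit-002", "household")

    original.load(jarvis)
    jarvis.remember("likes coffee at 7am")

    # The original goes in for repair; the same agent moves to the loaner.
    loaner.load(original.unload())
    print(loaner.perform("make coffee"), "| memory:", loaner.agent.memory)
```

The design choice doing the work here is that memory lives on the `Agent`, never on the `Robot`, so swapping or upgrading the body loses nothing. The same separation is what lets `dispatch` address a whole room of machines through one agent.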

Scary corner: Cybersecurity. What if one robot, or a swarm of robots, were hacked? What about malfunctions? Robots act on inferred reasoning and judgement, and like humans, they’re not perfect! But given their physical capabilities, mistakes can be costly and even deadly. How do you safely turn off a rogue robot? “I'm sorry, Dave. I'm afraid I can't do that.”

Given the pace of development in AI and specifically with robots and “embodied AI”, one can see why Sam Altman might say that the best future outcome for humanity is “to merge” with AI, to become what some have called “posthuman”. There are companies pursuing this through wearable neuromodulators and implanted neurochips, like Neuralink. Another post for another day.

Whatever our fate in the long-term, the business opportunity for robotics companies is massive and unfolding in front of our eyes. How long before robots are in every military base, construction site, warehouse, airport, train station, stadium, office building, hospital, school, place of worship, neighborhood and home?

This question brings to mind the book Human Compatible by Stuart Russell, the same legendary textbook author quoted above. He presents what’s known as the ‘control’ problem in AI, and his own unique perspective on how we should address it. Again, though, another post for another day.